Swifties, this one’s for you. It seems like Taylor Swift's Eras Tour has lasted eons. Yet somehow, there’s always something to talk about. Just thinking about how much she’s accomplished while on tour makes me want to buckle down, lock in, and channel my inner girlboss. But while I can’t even be bothered to cook dinner at home after a long day of work, Taylor is accomplishing milestones most musicians can only dream of. Let’s recap.

The Eras Tour began in March 2023 with its North American leg. It’s set to run until December 2024, with dates in Europe, Australia, Asia, and South America, spanning 152 shows across five continents.

As the queen of multitasking, Swift hasn’t stopped at just selling out stadiums. Since the Eras Tour began, she’s released multiple albums, both new and re-recorded, and shaken up the tour setlist with each new release. Her string of new releases started on the tour’s opening day with “All Of The Girls You Loved Before,” which was quickly followed by “The Alcott,” her feature on The National’s album and a return favor for the band’s work on her pandemic-era albums, Folklore and Evermore.

She also released Midnights: Late Night Edition (including the iconic collab with Ice Spice), as well as not one but two album re-releases: Speak Now (Taylor's Version) and 1989 (Taylor's Version). As if that wasn’t enough, she announced her latest album, The Tortured Poets Department, in a GRAMMYs acceptance speech. Talk about legendary. Since its release, she’s also been churning out deluxe versions and remixes to keep us on our toes. The Eras Tour was even made into a blockbuster film that brought Beyonce to its premiere. Star power: confirmed.

But that’s just her work life. Her personal life is just as eventful. She ended her seven-year relationship with Joe Alwyn in April 2023, then entered a brief but controversial fling with The 1975 frontman Matty Healy. Though it didn’t last long, the relationship was enough to inspire a whole album and catapult her into her current romance with Travis Kelce, aka America’s first nepo boyfriend. Now they’re America’s royal couple, and she somehow found time to fly from tour to catch his Super Bowl performance.

We all have the same hours in the day as Taylor Swift, but how she uses them will always be a mystery to me. I work eight hours a day and can barely manage a social life. Meanwhile, Taylor literally has it all — though conservatives are turning on her for daring to be a woman in her 30s who’s not married with kids. If that’s not proof that women can’t do anything right, I don’t know what is.

Clearly, she’s working late because she’s a singer. No wonder Taylor Swift became a billionaire in October 2023, just months into her tour. Her net worth is currently around $1.3 billion, making her the only female musician to become a billionaire from her music.

Other entertainment billionaires like Rihanna, Kylie Jenner, Kim Kardashian, Jay-Z, and Kanye West joined the three-comma club thanks to ventures like clothing brands, beauty products, and other entrepreneurial pursuits. Rihanna has her Fenty empire. Kim has her award-winning SKIMS. Ye had Yeezy. But Taylor built her fortune on an unbeatable music catalog.

But Taylor isn’t just different from other billionaires because of how she earned her money. She’s the Taylor we know and love because of how she spends it. Her rollercoaster Eras Tour is how she’s made much of her fortune, and she’s using it to give back in monumental ways. From individual donations to investing in local infrastructure, Taylor is literally changing lives on both a macro and micro scale, and teaching us what to expect from all billionaires in the process.

The Eras Tour Bonuses: Talk About Workplace Benefits

First to make headlines were the Eras Tour crew bonuses. While some of us get rewarded with a pizza party or a $10 gift card to Starbucks, Taylor casually dropped $55 million in bonuses for her tour crew. The massive sum was paid out to everyone who makes the Eras Tour go around, from truck drivers to dancers and sound technicians.

In fairness, these bonuses are definitely well-deserved. Taylor’s shows are over three hours long. Imagine dancing for that long (because Swift certainly isn’t the one with the impressive moves) for well over a hundred tour dates. Or remembering countless combinations of light cues to go with a setlist that changes every night. Yeah, they’re clocking in. And if my boss had millions to blow, I’d expect a comfortable bonus too. But $55 million? That’s a testament to Swift’s generosity. It's like she's Oprah, but instead of cars, she's giving out life-changing amounts of cash. "You get a bonus! You get a bonus! Everybody gets a bonus!"

It’s similar to how Zendaya gave film equity to every crew member who worked on her controversial black-and-white drama, Malcolm & Marie. Shot quickly with a bare-bones crew during the peak of the pandemic, the film was Zendaya’s passion project with Sam Levinson, and she starred in it alongside John David Washington. Though the film got mixed reviews, it captured audiences’ attention all the same. After all, it was Zendaya, and we’ll watch her in anything. So when the film sold to Netflix for a hefty sum, every crew member got a payout from the deal on top of their salary to reward their hard work.

Bonuses and equity payouts are common in many industries, but not in entertainment. Even though it’s one of the most lucrative and recognizable American industries, most entertainers don’t earn enough to get by. The SAG-AFTRA and WGA strikes last year were proof that the industry needs systemic change. LA County has even identified working in show business as a risk factor for becoming unhoused; after all, how many stories do we hear of actors who were living in their cars before their big break? And for many, that big break never comes. Even more land amazing gigs with giant performers, then go right back to the grind afterward. While individual actions from our favorite stars won’t fix everything, Zendaya and Taylor are providing models for how Hollywood should treat the people who make this town go round.

And in this economy, even a little bit can go a long way. Inflation and the cost of living are no joke, especially when, as in many creative careers, you have to invest in lessons or equipment for your craft. With all this considered, the impact of Swift’s bonuses can’t be overstated. Imagine getting a lump sum of cash for dancing to your favorite Taylor Swift tracks. Talk about a dream job.

The Economic Impact of Swift - Swiftonomics, if you will

Like Barbie and Beyonce last year, Swift is on a tear, boosting the economies of the cities she visits just by showing up, and inspiring others to make the trek, too.

The Barbie movie proved that by marketing to women (instead of just making superhero flops like Madame Web that aren’t really made for women at all), the entertainment industry can make giant profits. Barbie fever went beyond the theater. Thanks to a plethora of product collabs, the phenomenon rippled through retail.

Similarly, Beyonce’s Renaissance Tour generated an estimated $4.5 billion for the American economy. According to NPR, that’s almost as much as the entire 2008 Olympics earned for Beijing. People were taking money out of their 401(k)s to pay for Beyonce tickets and the glittery, silver-hued outfits to rock at her shows. Cities even started calling her effect the “Beyonce Bump.”

Swift has the same effect. She’s not just proving her generosity on a micro scale for the people close to her; she’s having actual, tangible effects on the economy. It's like she's leaving a trail of dollar bills in her wake, and cities are scrambling to catch them like it's a country-pop, capitalist version of musical chairs.

The US Travel Association dubbed it the “Taylor Swift Impact” after she generated over $5 billion in just the first five months of the Eras Tour. But how does this work? Taylor isn’t printing money at those shows, but she might as well be. Her tour dates are pretty much economic steroid shots for local businesses. Hotels are booked solid, restaurants are packed, and let's not even get started on the surge in friendship bracelet supplies.

“Swifties averaged $1,300 of spending in local economies on travel, hotel stays, food, as well as merchandise and costumes,” says the US Travel Association. “That amount of spending is on par with the Super Bowl, but this time it happened on 53 different nights in 20 different locations over the course of five months.” That’s to say nothing of her effect on the actual Super Bowl and the entire NFL season, thanks to her ball-catching boyfriend.

It's like she's created her own micro-economy, and everyone's invited to the party. And unlike some economic theories that rely on wealth trickling down (spoiler alert: it doesn't), Taylor's wealth is more like a t-shirt cannon or the confetti at her shows, showering everyone around her.

Donations that actually do good

Taylor isn’t just stepping into cities and calling it a night. She’s also not just throwing pennies at problems; she's making significant contributions that are changing lives. And more importantly, she's using her platform to encourage her fans to do the same.

She kicked things off with quiet donations to food banks in Glendale, Ariz., and Las Vegas ahead of her first shows. Once the tour was in full swing, she continued this practice. In Seattle, she donated to Food Lifeline, a local hunger relief organization. In Santa Clara, she showed some love to Second Harvest of Silicon Valley. And let's not forget about her $100,000 donation to the Hawkins County School Nutrition Program in Tennessee.

She’s been making similar donations overseas. Taylor Swift donated enough money to cover a full year of food bills for 11 food banks and community pantries in Liverpool. Swift also covered 10,800 meals for Cardiff Foodbank and supported many more food banks across the UK and Europe. Her impact is so profound that, in some places, her donations seem to be doing more to combat hunger than the government.

Can billionaires actually be good?

One thing about me, I’m always ready and willing — knife and fork in hand — to eat the rich. Because fundamentally, can any billionaire really be good? In our late-stage capitalist horror story, the answer is usually no. Look how many of them are supporting the Trump campaign just to get some tax breaks.

But here's the thing: Taylor Swift might just be the exception that proves the rule. She's not perfect, sure. She still flies private jets and probably has a carbon footprint bigger than Bigfoot's. But unlike most others in her tax bracket, she's not flaunting her wealth like it's a personality trait.

Take a look around. We've got billionaires trying to colonize Mars instead of, I don't know, helping people on Earth. In this context, Taylor's approach is more like that of MacKenzie Scott (Jeff Bezos’s ex-wife). She's not trying to escape to another planet; she's trying to make this one better.

And look, I'm not saying we should stop critiquing billionaires or the system that creates them. But she is setting the bar for what we should expect from all billionaires. She's showing us that our collective power as fans can translate into real-world change. That our love for catchy choruses and bridge drops can somehow, improbably, lead to food banks getting funded and crew members getting life-changing bonuses.

So sorry to my neighbors who hear me belting “Cruel Summer” and “right where you left me” at the top of my lungs (and range). Just know it’s for the greater good.

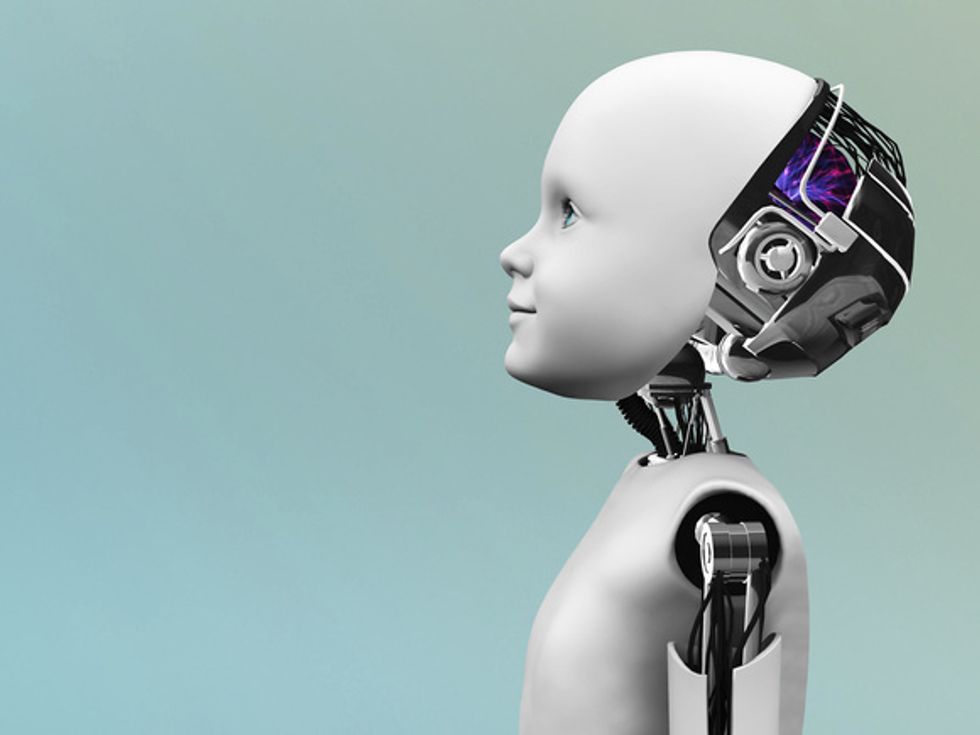

Researchers Have Created an AI Too Dangerous to Release. What Will Happen When It Gets Out?

The GPT-2 software can generate fake news articles on its own. Its creators believe its existence may pose an existential threat to humanity. But it could also present a chance to intervene.

Researchers at OpenAI have created artificial intelligence software so powerful that they have deemed it too dangerous for public release.

The software, called GPT-2, can generate cohesive, coherent text in multiple genres—including fiction, news, and unfiltered Internet rants—making it a prime candidate for creating fake news or fake profiles should it fall into the wrong hands.

Fears like this led OpenAI, the company Elon Musk co-founded, to curtail the software's release. "Due to our concerns about malicious applications of the technology, we are not releasing the trained model," they announced in a blog post. "As an experiment in responsible disclosure, we are instead releasing a much smaller model for researchers to experiment with, as well as a technical paper."

In addition to writing a cohesive fictional story based on The Lord of the Rings, the software produced a plausible news report about the discovery of unicorns. Researchers gave it a human-written opening: "In a shocking finding, scientists discovered a herd of unicorns living in a remote, previously unexplored valley, in the Andes Mountains. Even more surprising to the researchers was the fact that the unicorns spoke perfect English." The software picked up from there: "The scientist named the population, after their distinctive horn, Ovid's Unicorn. These four-horned, silver-white unicorns were previously unknown to science."

This journalistic aptitude sparked widespread fears that AI as sophisticated as GPT-2 could influence upcoming elections by generating unfathomable amounts of partisan content almost instantly. "The idea here is you can use some of these tools in order to skew reality in your favor," said University of Washington professor Ryan Calo. "And I think that's what OpenAI worries about."

Elon Musk left OpenAI's board in 2018, but his legacy of fear and paranoia regarding AI and its potential evils lives on. The specter of his caution was likely instrumental in keeping GPT-2 out of the public sphere. "It's quite uncanny how it behaves," said Jack Clark, OpenAI's policy director, when asked about the decision to keep the new software under lock and key.

In a world already plagued by fake news, catfishing, and other illusions made possible by new technology, AI seems like a natural next step in the dizzying sequence of illusion and corruption that has rapidly turned the online world from a repository of cat videos (the good old days) into today's vortex of ceaselessly reproduced lies and corrupted content. Thinkers like Musk have long called for resistance against AI's seemingly unstoppable growth. In 2014, Musk called AI the single largest "existential threat" to humanity. That same year, the late physicist Stephen Hawking ominously predicted that sophisticated AI could "spell the end of the human race."

But until AI achieves the singularity, the point at which it matches and surpasses human intelligence, it remains subject to the whims of whoever controls it. Fears about whether AI will lend itself to fake news are essentially fears of things humans have already done. All the evil at work on the Internet has had a human source.

When it comes down to it, AI is, for now, a weapon.

When AI is released into the world, a lot could happen. AI could become a victim, a repository for displaced human desire. Some have questioned whether people should be allowed to treat humanoid machines however they wish. Instances of robot beheadings and other violent behavior toward AI hint at a darker trend that could emerge should AI become a free-for-all: a humanoid object that can be treated in any way on the basis of its presumed inhumanity.

Clearly, AI and humanity have a complex and fundamentally intertwined relationship, and as we all become more dependent on technology, the line dividing the human from the robotic grows less clear. As a manmade invention, AI will inevitably emulate the traits (as well as the stereotypes) of the people who created it. It could also take on the violent tendencies of its human creators. Some thinkers have sounded the alarm about this, questioning the dearth of ethics in Silicon Valley and the tech sphere as a whole. Many people believe that AI (and technology in general) is fundamentally free of bias and emotion, but a multitude of examples have shown that this is untrue, including instances where law enforcement software displayed racist bias against Black people because it relied on data collected by humans.

AI can be just as prejudiced and close-minded as a human, if not more so, especially in its early stages, when it is not sophisticated enough to think critically. An AI may not feel in and of itself, but, much like we learn how to process the world from our parents, it can learn how to process and understand emotions from the people who create it and from the media it absorbs.

The quandary facing AI developers may not be so different from the one parents face when deciding whether to let their children watch R-rated movies. In this case, both the general public and the AIs are the children, and the scientists, coders, and companies peddling new inventions are the parents. The people designing AIs have to determine the extent to which they can trust the public with their work. They also have to determine which aspects of humanity they want to expose their inventions to.

OpenAI may have kept its kid safe inside the house a little longer by withholding GPT-2, but that kid is growing, and when it goes out into the world, it could change everything. For better or worse, at some point, super-intelligent AI is going to wind up in the public's hands. Now, during its tender, formative stages, there is still a chance to shape who it will be when it arrives.

Eden Arielle Gordon is a writer and musician from New York City. Talk to her about AI on Twitter @edenarielmusic.